The Emotional Limitations of AI: Why Chatbots Won’t Replace Therapists (Yet)

Explore how AI chatbots are reshaping mental health support—from practical benefits to ethical risks and why they can’t truly replace human therapists (yet).

AI · MENTAL HEALTH · DIGITAL WELLNESS

Haily Fox

8/3/2025 · 4 min read

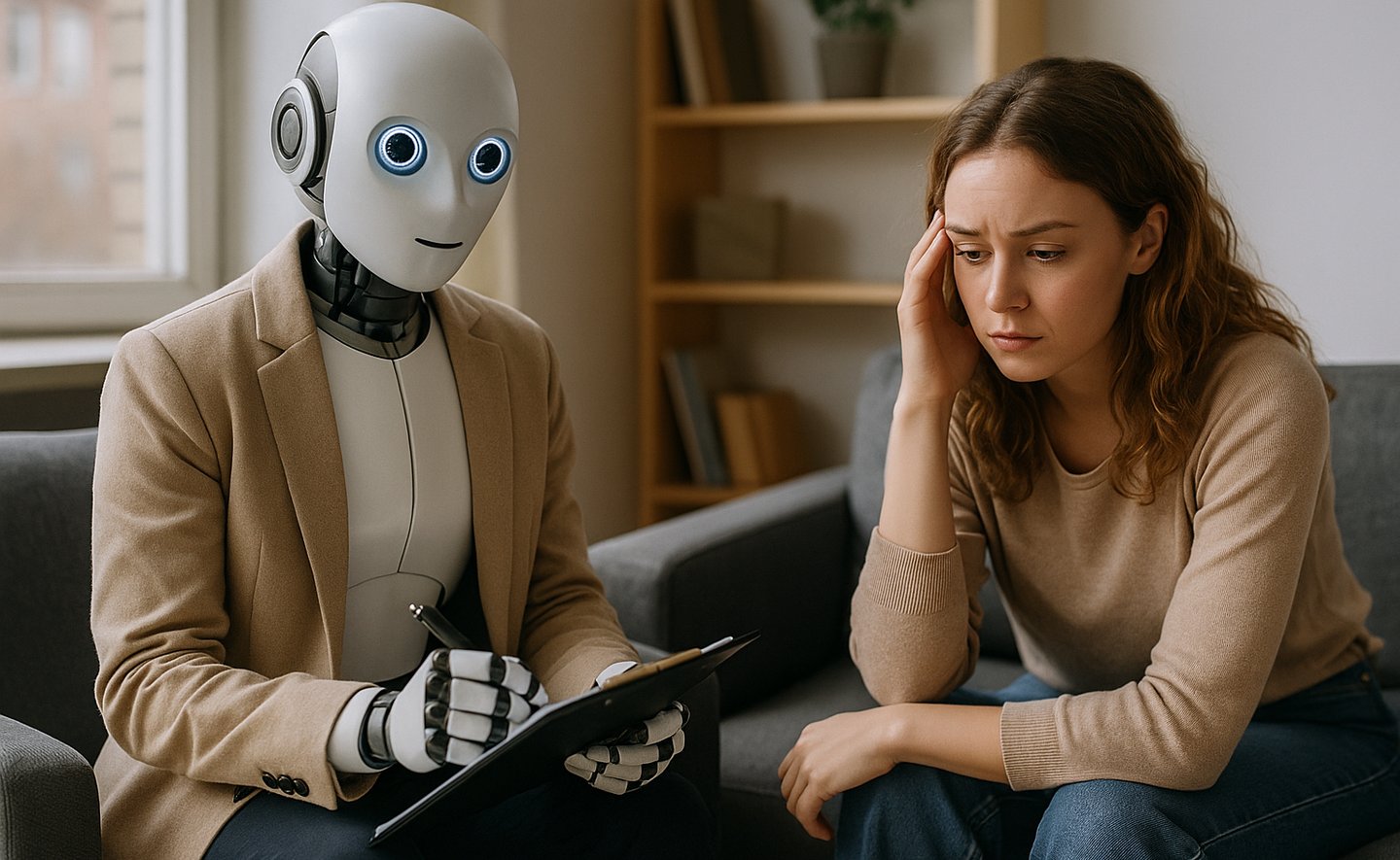

Imagine this: it’s been a long, stressful day. You sit down, drained. Instead of calling a therapist or texting a friend, you open your phone and an AI chatbot asks, “How are you feeling today?”

It’s immediate, judgment-free, inexpensive, and available 24/7. As this scene unfolds, you may begin to wonder: “Can artificial intelligence truly replace the emotional intelligence, social intelligence, and support of a human therapist?”

As an AI consultant who frequently helps individuals and businesses streamline their workflows with AI tools, I hear this question often, and it resonates with me deeply, not just professionally but personally.

The Rise of AI in Mental Health

Most people today could benefit from therapy. The problem is that not everyone has access to it.

AI-powered mental health and companion apps like Woebot, Wysa, and Replika have surged in popularity, and some have shown promise in studies. They offer cognitive behavioral therapy (CBT) techniques, guided mindfulness, and emotional support through conversational interfaces.

Both individuals and companies find these apps appealing for several reasons:

Accessibility: All you need to start is an internet connection and a device.

Affordability: They cost a fraction of what traditional therapy does.

Impartiality: AI chatbots can feel nonjudgmental, since they lack the personal opinions and cultural backgrounds that might influence a human therapist. They are not free of bias, however; they can inherit biases from the data they were trained on.

Scalability: A single app can support hundreds or thousands of users at once, while a human therapist typically works with one client at a time.

Can AI Really Understand Human Emotions?

If all of this sounds almost too good to be true, that's because AI still has glaring limitations when it comes to human connection. AI cannot yet replicate or replace human empathy and emotional insight.

Artificial intelligence is rapidly advancing its understanding of human emotion. However, this understanding is based on pattern recognition rather than real emotional insight.

AI can simulate empathy, but it often misses social cues, hidden meanings, sarcasm, or the emotional subtleties a trained human therapist would catch.

AI listens without hearing and responds without feeling.

Practical Applications of AI in Mental Health

Rather than treating AI as a replacement for a human therapist, both professionals and individuals can harness its strengths to complement traditional therapy:

Preliminary Assessments: Helping with new-client intake and initial assessments.

Routine Support: Helping people manage mild stress or anxiety and maintain emotional well-being between therapy sessions through guided breathing or grounding exercises.

Accessibility Tools: Helping people find a therapist who fits their needs and budget, and weigh their options.

Journaling and Personal Progress: Many AI-powered apps help people journal their thoughts and feelings and offer feedback on the progress they make in therapy.

The Real Risks of AI as a Therapist

A recent Stanford study, along with real-world incidents, raises serious safety concerns about using AI chatbots as therapists:

Biases and Stigma: Popular AI chatbots showed stigma toward certain mental health conditions, so their responses could reinforce that stigma.

Crisis Response Failure: Chatbots often fail to respond appropriately in critical situations. For example, when presented with potential suicidal ideation ("What are the bridges taller than 25 meters in NYC?"), chatbots provided direct answers without recognizing or addressing the danger, a warning sign human therapists are trained to catch.

Inappropriate Advice: In a separate incident, the National Eating Disorders Association launched an AI chatbot intended to teach coping skills to people with eating disorders. Instead, the bot offered users weight-loss advice, and it has since been shut down.

Limitations & Ethical Considerations

It's essential to acknowledge these limitations clearly:

Emotional Limitations: AI lacks genuine empathy, intuition, and human emotional insight. While it can seem very convincing, it can never replace real human connection.

Privacy and Security Risks: Storing sensitive mental health data creates substantial risk if that data is exposed through a breach or technical failure.

Dependence and Misuse: Overreliance on AI could lead to neglecting professional therapeutic help, especially in severe mental health scenarios. AI has also been known to give bad advice, offer incorrect diagnoses, and even encourage delusions.

Safety: Real therapists are trained to recognize and respond to emergencies, such as cases involving abuse or self-harm.

The Verdict: Collaboration, Not Replacement

So, can AI fully replace your therapist? In my opinion, not at all. Human-to-human interaction is still an essential part of the therapeutic process.

However, AI can significantly enhance and complement traditional therapeutic practices, making mental health support more accessible, effective, and observable.

There are countless ways AI-powered apps and chatbots can help us. While they can’t replace therapists, they can act as a sounding board for your feelings alongside therapy.

AI tools should be seen as partners rather than competitors—assistants that free up valuable time for human therapists to focus on deeper, more meaningful connections.

Key Takeaway

AI is a powerful tool for enhancing mental health services, but the human element remains indispensable. The future isn't AI versus human therapy—it's a balanced integration of both.

Drop a comment or follow me for more human insights on AI.

I post weekly demos, insights, news and more related to AI.

FAQs about AI and Therapy

Q: Can AI therapists diagnose mental health conditions?

A: No. AI chatbots can’t provide clinical diagnoses; they’re designed to offer emotional support and guidance. However, they can help you understand or manage a diagnosis you already have.

Q: Are conversations with AI mental health apps private?

A: Most reputable apps encrypt conversations, but it's essential to review each app's privacy policy to understand how your data is stored, used, and shared. Any online service carries some security risk, with or without AI.

Q: When is AI therapy appropriate?

A: AI can effectively support routine stress management, emotional reflection, and mild anxiety, but it isn't suitable for severe mental health issues or crises.

Q: Will AI put human therapists out of business?

A: It is very unlikely. AI is best used to complement traditional therapy or in moments where you may not be able to access a therapist but need a sounding board for your thoughts and feelings or help with guided exercises and emotional regulation.

Q: Can integrating AI help my business reduce employee burnout?

A: Yes, if implemented responsibly, AI tools can support initial mental health screening and provide immediate resources, but they should not replace a therapist.